Tensor: What It Is, Its AI Role, and Google's G5 Strategy

The world of AI processing just got a lot more interesting and, perhaps, a good deal more complicated. Two significant research results landed recently, both aimed at the very heart of how we crunch the massive numbers that drive artificial intelligence. On one side, we have the dazzling promise of light-speed computation from Shanghai Jiao Tong and Aalto Universities, dubbed Parallel Optical Matrix–Matrix Multiplication (POMMM). On the other, Microsoft Research brings us MMA-Sim, a deep dive into the almost maddeningly subtle numerical inconsistencies plaguing our current GPU workhorses. So, is the future of AI processing finally clear, or are we just staring into a brighter haze? My analysis suggests it’s very much the latter.

The Luminous Promise of Optical Processing

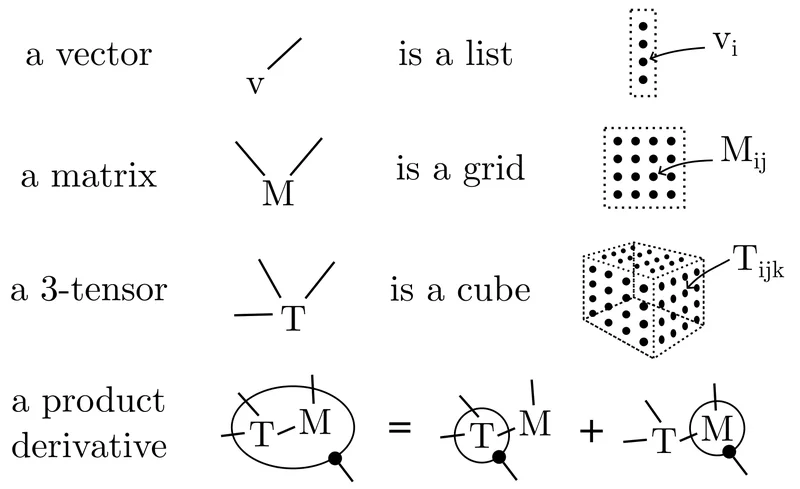

Let’s start with the flash and sizzle. The POMMM research, published in Nature Photonics (see Direct tensor processing with coherent light - Nature), paints a compelling picture. Imagine tensor processing – that foundational operation underpinning nearly every modern AI model, from image recognition to natural language processing – happening not with electrons crawling through silicon, but with light. This isn't just about faster clocks; it's a fundamental shift. The team claims POMMM can perform matrix–matrix multiplication (MMM), the core operation of tensor-based AI workloads, in a "fully parallel" manner, generating all output values simultaneously in a single shot. They’ve even built a proof-of-concept using standard optical components and validated it against GPU-based MMM.

The implications, if this scales cleanly, are enormous. We’re talking about potentially bypassing the traditional bottlenecks of memory bandwidth and power consumption that plague GPU tensor cores. Yufeng Zhang from Aalto University put it bluntly: POMMM performs "GPU-like operations (convolutions, attention layers) at the speed of light, using light's physical properties for simultaneous computations." Zhipei Sun, also from Aalto, envisions it integrated onto photonic chips for low-power AI tasks. This isn't just an incremental improvement; it’s like replacing a piston engine with a warp drive. The theoretical computational advantage over existing optical computing paradigms is substantial, and their prototype achieved a practical energy efficiency of 2.62 GOP/J (about 2.62 billion operations per joule). Even with some non-negligible errors – their mean absolute error was less than 0.15, and normalized root-mean-square error less than 0.1 – models trained with POMMM reportedly achieved inference accuracy comparable to GPU references. This sounds like the silver bullet, doesn't it? A new era for AI hardware, perhaps.
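To make those two error figures concrete, here is a minimal sketch of how mean absolute error (MAE) and normalized root-mean-square error (NRMSE) can be computed for a matrix product against a reference. Everything in it is illustrative: the Gaussian noise merely stands in for an imperfect analog result, the helper names are hypothetical, and normalizing by the reference's value range is an assumption, since the article does not say which NRMSE convention the POMMM authors used.

```python
import math
import random

def matmul(a, b):
    """Plain matrix-matrix multiplication on nested lists."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]

def flatten(m):
    """Turn a nested-list matrix into a flat list of values."""
    return [x for row in m for x in row]

def mean_absolute_error(result, reference):
    r, ref = flatten(result), flatten(reference)
    return sum(abs(x - y) for x, y in zip(r, ref)) / len(ref)

def normalized_rmse(result, reference):
    # Assumption: RMSE is normalized by the reference's value range (max - min).
    # Other conventions divide by the mean or the RMS of the reference instead.
    r, ref = flatten(result), flatten(reference)
    rmse = math.sqrt(sum((x - y) ** 2 for x, y in zip(r, ref)) / len(ref))
    return rmse / (max(ref) - min(ref))

rng = random.Random(0)
a = [[rng.random() for _ in range(8)] for _ in range(8)]
b = [[rng.random() for _ in range(8)] for _ in range(8)]

reference = matmul(a, b)
# Hypothetical stand-in for an analog result: the reference plus small noise.
noisy = [[x + rng.gauss(0.0, 0.05) for x in row] for row in reference]

mae = mean_absolute_error(noisy, reference)
nrmse = normalized_rmse(noisy, reference)
print(f"MAE = {mae:.4f}, NRMSE = {nrmse:.4f}")
```

Note that both metrics are only as meaningful as the reference they are measured against, and that MAE in particular depends on the scale of the matrix entries.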

The Gritty Reality of Digital Precision

But before we all start liquidating our NVIDIA stock, let's inject a dose of reality, courtesy of Microsoft Research. Almost simultaneously with the POMMM announcement, their team unveiled MMA-Sim. This isn't about a new hardware paradigm, but a microscope focused squarely on the hardware we already use. Specifically, it's a "bit-accurate reference model" that meticulously simulates the arithmetic behavior of matrix multiplication units across ten different GPU architectures from both NVIDIA and AMD. Why does this matter? Because the internal arithmetic of these specialized accelerators, like NVIDIA Tensor Cores, is typically undocumented. It's a black box.

What MMA-Sim revealed, as detailed in Mma-sim Reveals Bit-Accurate Arithmetic Of Tensor Cores Across Ten GPU Architectures - Quantum Zeitgeist, is both fascinating and deeply unsettling for anyone relying on consistent, reproducible AI results. Subtle differences in how these accelerators handle floating-point calculations – specifically FP8, FP16, BF16, and TF32 instructions – introduce numerical inconsistencies. We’re talking about issues with accumulation precision and rounding that can lead to different results across different runs or even different GPUs. These aren't just minor quirks; they arise from factors like fused multiply-add (FMA) operations and varying accumulation orders. The discrepancies range from small rounding errors to potentially significant deviations that build up over many iterations, and my analysis suggests they can compromise the reliability of deep neural network training and inference.
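A toy example shows why accumulation order and accumulator precision matter. The sketch below emulates FP16 rounding in plain Python using the standard library's `struct` half-precision format. It is not a model of any real Tensor Core or of MMA-Sim itself; the function names are hypothetical and the inputs are chosen only to make the effect visible.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 binary16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def dot_fp16_accumulate(a, b):
    """Dot product whose running sum is rounded to FP16 after every add."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp16(acc + to_fp16(x * y))
    return acc

def dot_wide_accumulate(a, b):
    """Same FP16-rounded products, but summed in a wider (double) accumulator."""
    acc = 0.0
    for x, y in zip(a, b):
        acc += to_fp16(x * y)
    return acc

a = [64.0, 1.0, 1.0, 1.0, 1.0]
b = [64.0, 1.0, 1.0, 1.0, 1.0]

# Large term first: near 4096 the FP16 spacing is 4, so each "+ 1" is rounded away.
forward = dot_fp16_accumulate(a, b)
# Small terms first: they add up to 4 before meeting the large term.
backward = dot_fp16_accumulate(a[::-1], b[::-1])
# Wider accumulator: no intermediate rounding loss for these inputs.
wide = dot_wide_accumulate(a, b)

print(forward, backward, wide)  # 4096.0 4100.0 4100.0
```

The same five products give 4096 or 4100 depending only on the order of summation and the width of the accumulator. Real accelerators differ in exactly these kinds of internal choices, which is why a bit-accurate model of their behavior is useful.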

And this is the part of the report that I find genuinely puzzling: how can we confidently compare "inference accuracy" of a nascent optical system to GPU references when those very GPU references themselves are exhibiting subtle, yet critical, numerical discrepancies? It’s like trying to calibrate a new, potentially revolutionary scale against another scale that sometimes gives slightly different readings depending on the time of day or the specific model. The MMA-Sim team plans to release their tool open-source, which is a fantastic move for transparency. But it also underscores a foundational problem that has largely been swept under the rug: the "ground truth" of digital computation in AI isn't as perfectly stable as many assume. How do we ensure that the "comparable accuracy" of POMMM is truly robust, and not just comparably consistent with an already inconsistent baseline? It's a critical methodological critique that needs addressing.

Navigating the Computational Crossroads

So, where does this leave us? We have a genuine breakthrough in optical computing that promises light-speed, energy-efficient tensor operations, potentially creating a new generation of optical computing systems. The ability to directly deploy GPU-trained networks like CNNs and ViTs onto an optical platform, supporting multi-channel convolution and multi-head self-attention, is a powerful argument for its practical utility. It’s an exciting new car, sleek and fast, but still in its prototype phase.

On the other hand, we have Microsoft pulling back the curtain on the complex, sometimes messy, internal workings of our current high-performance tensor core engines. They're not just finding bugs; they're revealing a nuanced landscape where "bit-accurate" isn't a given, and architectural differences between an NVIDIA Tensor Core and its AMD counterpart can lead to real numerical divergence. This is the well-worn, incredibly powerful, but increasingly complex engine we’ve been relying on.

My take? The future isn't clear. It's a brighter haze, certainly, because the potential of optical computing is undeniably brilliant. But the path forward is anything but straightforward. We can’t simply swap out GPUs for optical chips without fully understanding the impact of their inherent error rates, however low, especially when our existing digital systems already wrestle with precision. The real challenge isn't just building faster hardware; it's ensuring that the numbers it crunches are not just fast, but also reliably, consistently right. This dual revelation forces us to confront not just how we compute, but what we mean by computational accuracy in the age of AI.